Table of Contents

- Overview

- Avoid click jack attack: Use X-Frame-Options [Deny]

- Restrict exposed HTTP method - UrlScan tool

- Disabled directory listing and directory traversal: HTTP error 403 vs. 404

- Encrypt connection string in web.config file

- Always set custom error page

- Always pass secure cookie

- Web farm security norms

- Miscellaneous

- Addendum: Security hardening in IIS 6.0 vs. IIS 7.0

- Addendum: HotLinking Resources

- Addendum: Security Best Practices

- Fine Tune Response Headers

- HTTP: Postman Interceptor and Developers Tool

- Cookies, Smart and vulnerable cookies

- Break it and fix it!

- OWASP Top 10 Commandments YYYY

- Conclusion

- Guru: Troy Hunt

1. Overview

This article helps you build robust web applications by covering the security aspects that need attention while designing a system. A system designed without a security assessment leads to non-compliance and is vulnerable to attacks. The guidance below helps harden applications, mitigate the risk of likely attacks, and reduce unauthorized activity. The problems, scenarios, and solutions described here are .NET centric. I have tried to cover the most essential security review items, the ones that cause the most issues and non-compliance.

2. Avoid click jack attack: Use X-Frame-Options [Deny]

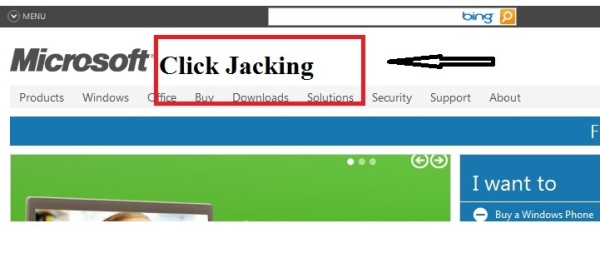

In a nutshell, a click on a website can be hijacked by another website using the click jacking technique. The basic idea is to exploit the z-index property of DIV and IFrame tags in an HTML page. A dummy website placed just above the actual one can interfere with transactions on the actual website. What happens here? The malicious website loads the actual website page in an HTML IFrame and keeps it in the background with AllowTransparency set to 'false'. Using this mechanism, the dummy website simulates the real website, with masked buttons placed exactly above the real ones, so that users perform actions on the actual website based on information provided by the dummy website with a fraudulent motive.

Click jacking technique

Here is the two step implementation:

- Keep the target website under a low level z-index, say 5, with AllowTransparency="false"

- Keep the dummy website at a high level z-index, say 10

<html>

<body>

<iframe src="http://www.microsoft.com" allowtransparency="false" style="position: absolute;

left: -5px; top: 12px; width: 731px; background: white; height: 259px; z-index: 5;

margin-top: 0px;" border="0" frameborder="0" scrolling="no"></iframe>

<div style="position:absolute; left:0px; top:0px; width: 250px; height: 200px; background:white; z-index:10">

<h1>Click Jacking</h1>

</div>

</body>

</html>

The concept is pretty simple. The phishing website is placed just above the target website so that whatever action is performed on the dummy website triggers events on the actual website. This is how the foul play leads to invalid transactions, and why the target website is vulnerable to click jacking.

Real time scenario

Assume there is an e-commerce site where we can purchase a book online. A malicious website presents a near-exact replica with a few changes that motivate users to transact. Suppose the e-commerce site has a button with a Buy caption, and the malicious site places a masked non-event button 'Donate' just above it. The moment the user clicks the 'Donate' button, the click actually triggers the Buy button at the lower z-index. This is how hackers can misuse your website for their own purposes.

Solution for click jacking websites

The solution below is strictly .NET centric; every technology has its own solution for this problem. The X-Frame-Options response header lets us restrict our website from being framed and misused through click jacking.

Three solutions are available to tackle this:

Solution 1

Add the code below to the Global.asax file of your web application:

void Application_BeginRequest(object sender, EventArgs e)

{

this.Response.Headers["X-FRAME-OPTIONS"] = "DENY";

}

Point to be noted here: outside IIS integrated pipeline mode, this throws an error such as "This operation requires IIS integrated pipeline mode".

Solution 2: This works in both Classic and Integrated pipeline modes

void Application_BeginRequest(object sender, EventArgs e)

{

HttpContext.Current.Response.AddHeader("x-frame-options", "DENY");

}

Solution 3: We can set this restriction directly in IIS by adding a custom X-Frame-Options header

Websites are vulnerable to click jacking, which allows an attacker to trick a user into clicking a malicious link. The procedure to send the X-Frame-Options header using IIS is:

- Open IIS (by running the inetmgr command).

- Go to the website folder and right click on your website, and go to Properties.

- Go to the HTTP Header tab.

- Go to the Custom Header section.

- Click on Add.

- Custom Header Name: X-Frame-Options Custom Header Value: "DENY" (without the quotes) or "SAMEORIGIN".

- If a subdirectory website is there, then IIS will ask you to override this to the subdirectory website.

- OK and restart IIS by running the iisreset command.
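On IIS 7 and later, the same header can usually be declared in web.config instead of the IIS Manager dialog. The fragment below is a minimal sketch, assuming the site runs on IIS 7+ and that no parent configuration already adds the header (a duplicate add would raise a configuration error).

```xml
<configuration>
  <system.webServer>
    <httpProtocol>
      <customHeaders>
        <!-- DENY forbids all framing; SAMEORIGIN allows framing by the same site only -->
        <add name="X-Frame-Options" value="DENY" />
      </customHeaders>
    </httpProtocol>
  </system.webServer>
</configuration>
```

Keeping the header in web.config means it travels with the application when it is deployed to another server.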

References

- Anti Click Jack

- What is X-frameoptions DENY, SAMEORIGIN or NONE http://blogs.msdn.com/b/ieinternals/archive/2010/03/30/combating-clickjacking-with-x-frame-options.aspx

- http://technet.microsoft.com/en-us/security/cc242650


- http://msdn.microsoft.com/en-us/library/wce3kxhd.aspx

- http://blogs.msdn.com/b/sdl/archive/2009/02/05/clickjacking-defense-in-ie8.aspx

- https://www.owasp.org/index.php/Clickjacking

- http://blogs.msdn.com/b/ie/archive/2009/01/27/ie8-security-part-vii-clickjacking-defenses.aspx

- http://www.syfuhs.net/category/IIS.aspx

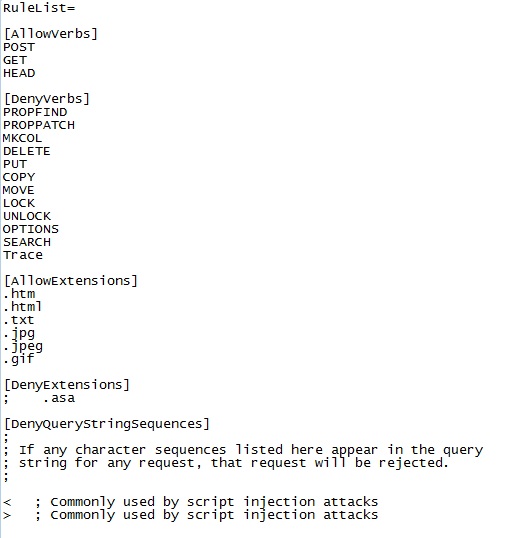

3. Restrict vulnerable HTTP method - UrlScan tool

HTTP methods that can be exploited are often left enabled. For example, when the OPTIONS HTTP method is enabled, it can be used for footprinting/profiling the application and server. The answer to this problem is the UrlScan tool.

Why UrlScan?

UrlScan is a security tool that scans and restricts incoming HTTP requests processed by IIS. By blocking specific HTTP requests, UrlScan helps prevent potentially harmful requests from being processed by web applications on the server. It lets us apply the security rules and policies that must hold whenever an HTTP request is passed to or processed by IIS. The UrlScan concept can be a bit confusing for a novice at first. Basically, the tool provides a configuration template in which we can define our own rules or use the existing rules, as applicable to the application and the business requirement.

Advantage

It helps keep malicious code and harmful requests from reaching IIS, where they could threaten the overall functioning of the website.

What does it do?

The rules act as inspectors that restrict harmful incoming requests sent to IIS. To make this work, we need to install UrlScan on the web server where IIS is the hosting platform. UrlScan comes with default configuration settings, which we can modify as per our requirements.

Define rules and policies:

- Rules to block SQL Injections

- Rules to set URL format

- Limit length of header values

- Limit max length of URL

- Limit max length of query string in URL

- Limit Request’s Content Length

- HTTP methods: GET, POST, HEAD, TRACE

Where do we configure the above ruleset?

All the above rules are present in the UrlScan.ini configuration file, and as per our business requirement or compliance needs, we can reconfigure them or add custom configurations to it. The sample configuration settings are given below:

Alternative to UrlScan

UrlScan applies rules to the overall website at the IIS level. If we want security norms applied to a specific website or module, we must look into the code access security options in .NET. Three essential practices help us apply security to specific files, directories (URLs), and file types. Each comes with a specification and requirements of its own.

1. FileAuthorizationModule

This works against the ACLs of the files behind .aspx or .asmx requests. If we rely on IIS for authentication, then we need ASP.NET impersonation. Impersonation works with the Windows identity and is based on the identity token passed by IIS. I won't spend much time explaining impersonation here; I just want the reader to focus on code access security and spend time understanding the concepts and theory behind it. We mostly face security enablement issues in MOSS and PPS installations, where the environment must be set up right the first time and everything afterwards rides on the code and deliverables.

public sealed class FileAuthorizationModule : IHttpModule

2. UrlAuthorizationModule

URL authorization usually has three main line items: USER, ROLE, and VERBS. It needs a role-based security model in place, where domain groups carry roles assigned to users for specific business requirements. Verbs are the HTTP method types, such as GET, POST, HEAD, OPTIONS, TRACE, FIND, and so on; this is what we saw in the UrlScan tool. We can set the allowed HTTP methods based on the server environment. For example, we may enable TRACE and OPTIONS in the test and development environments, whereas we don't want them in production, as they may harm performance and leak information about the server or its configuration.

- Users: * (all users), ? (anonymous users), or specific named users

- Roles (Domain/Admin, Domain/BusinessUser, and so on)

- Verbs: HTTP methods such as GET, POST, HEAD, OPTIONS, FIND, TRACE, etc.

<authorization>

<[allow|deny] users roles verbs />

</authorization>

<authorization>

<allow verbs="GET" users="*"/>

<allow verbs="POST" users="xyz"/>

<deny verbs="POST" users="*"/>

</authorization>
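To apply such rules to one directory rather than the whole site, the authorization element can be wrapped in a location element. The sketch below assumes a hypothetical Admin folder and a hypothetical DOMAIN\Admin role; neither name comes from the article.

```xml
<configuration>
  <!-- "Admin" is a hypothetical sub-folder of the application -->
  <location path="Admin">
    <system.web>
      <authorization>
        <!-- Rules are evaluated top-down; the first match wins -->
        <allow roles="DOMAIN\Admin" />
        <deny users="*" />
      </authorization>
    </system.web>
  </location>
</configuration>
```

Because the first matching rule wins, the allow entry must come before the catch-all deny.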

HttpForbiddenHandler: Exclude unnecessary unused file extension

Disable remoting, batch files, and executables on internet-facing web servers (.rem, .soap, .bat, .exe). Additionally, if we know that a given set of file extensions is not used in our application, we can map them to HttpForbiddenHandler. Ref: Implement HttpForbiddenHandler in IIS, Securing Web Server.

<system.web>

<httpHandlers>

<add verb="*" path="*.mdb" type="System.Web.HttpForbiddenHandler" />

<add verb="*" path="*.csv" type="System.Web.HttpForbiddenHandler" />

<add verb="*" path="*.private" type="System.Web.HttpForbiddenHandler" />

<add verb="*" path="*.rem" type="System.Web.HttpForbiddenHandler"/>

<add verb="*" path="*.soap" type="System.Web.HttpForbiddenHandler"/>

<add verb="*" path="*.asmx" type="System.Web.HttpForbiddenHandler"/>

</httpHandlers>

</system.web>

4. Disabled directory listing and directory traversal: HTTP error 403 vs. 404

When an application has directory listing enabled, users can see the internal layout of the application and all the pages available. Say, for example, you have a website NewBee with the directories Website/Image, Website/Script, and Website/UI.

Normally the user will browse the application at https://NewBee.com, and the site will work as expected.

Scenario 1 - If someone types the URL https://Newbee.com/UI, the server lists all the files under that directory/folder. This leaves your site vulnerable to security threats, because you allow users to discover the directory structure.

Scenario 2 - Suppose directory listing is prohibited and HTTP error code 403 is returned; the user then gets a message that directory browsing is forbidden. That confirms the UI directory is valid and exists in some form. This gives a hacker one more step towards learning the directory structure and file placement.

Remediation and workaround

- Disable directory listing - Check if custom error 403 is set.

- Configure the web server to display an HTTP 404 error page in the cases when a user tries to perform a directory traversal. Display an HTTP 404 error instead of HTTP 403 error as a malicious user can use the HTTP 403 error to map the application structure.
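The first remediation item can be expressed directly in web.config on IIS 7 and later. This is a minimal sketch, assuming the directoryBrowse section is not locked at the server level.

```xml
<configuration>
  <system.webServer>
    <!-- Turns off automatic directory listings for the whole application -->
    <directoryBrowse enabled="false" />
  </system.webServer>
</configuration>
```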

How to configure HTTP 404 error in place of HTTP 403?

using System;

using System.Collections.Generic;

using System.Linq;

using System.Web;

namespace MyNameSpace

{

public class NoAccessHandler: IHttpHandler

{

#region IHttpHandler Members

public bool IsReusable

{

get { return true; }

}

public void ProcessRequest(HttpContext context)

{

context.Response.StatusCode = 404;

}

#endregion

}

}

<httpHandlers>

<add verb="*" path="UI/*" validate="false" type="MyNameSpace.NoAccessHandler"/>

</httpHandlers>

<system.webServer>

<handlers>

<add name="NoAccess" verb="*" path="UI/*"

preCondition="integratedMode" type="MyNameSpace.NoAccessHandler"/>

</handlers>

</system.webServer>

5. Encrypt connection string in web.config file

Never keep a plain-text connection string in the web.config file. The risks and consequences are self-explanatory. All you need to do is follow the steps below.

Steps to be followed:

- Go to the Visual Studio command prompt in the “C:\WINDOWS\Microsoft.NET\Framework\v2.0.50727\” path.

- Install ASP.NET using the aspnet_regiis -i command.

- Encrypt the Web.config connection strings using the command below, where "path" is the path of the physical folder where web.config resides (e.g., aspnet_regiis -pef "connectionStrings" D:\Apps\NewBeeWebsite):

aspnet_regiis -pef "connectionStrings" path

- You will get the message “Encrypting configuration section... Succeeded!”.

- Run the following commands to grant the ASP.NET process accounts access to the key container:

aspnet_regiis -pa "NetFrameworkConfigurationKey" "ASPNET"

aspnet_regiis -pa "NetFrameworkConfigurationKey" "NETWORK SERVICE"

aspnet_regiis -pa "NetFrameworkConfigurationKey" "NT AUTHORITY\NETWORK SERVICE"

- Restart IIS.
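For orientation, the section that the -pef switch targets looks roughly like the sketch below before encryption. The connection name NewBeeDb, the server name SqlHost, and the database name are hypothetical. After encryption, the plain-text entries are replaced in place by an EncryptedData element, and ASP.NET decrypts the section transparently at run time.

```xml
<configuration>
  <connectionStrings>
    <!-- Plain-text form before encryption; every value here is hypothetical -->
    <add name="NewBeeDb"
         connectionString="Data Source=SqlHost;Initial Catalog=NewBee;Integrated Security=SSPI"
         providerName="System.Data.SqlClient" />
  </connectionStrings>
</configuration>
```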

6. Always set a custom error page

Why a custom error message? Enable the custom error page option in the web.config file. Never reveal source-level error messages to end users, because they help an attacker understand the semantics and flow of your code. For any application-level exception, it is good practice to display a custom error page.

<customErrors mode="On" defaultRedirect="ErrorPage.aspx" />
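A slightly fuller variant is sketched below. It assumes a hypothetical NotFound.aspx page next to ErrorPage.aspx, and it uses RemoteOnly so that detailed errors stay visible only when browsing from the server itself.

```xml
<configuration>
  <system.web>
    <!-- RemoteOnly: remote users get the custom pages, local requests see full details -->
    <customErrors mode="RemoteOnly" defaultRedirect="~/ErrorPage.aspx">
      <!-- NotFound.aspx is a hypothetical page name -->
      <error statusCode="404" redirect="~/NotFound.aspx" />
      <error statusCode="500" redirect="~/ErrorPage.aspx" />
    </customErrors>
  </system.web>
</configuration>
```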

7. Always pass a secure cookie

The Secure cookie attribute prevents cookies from being sent over unencrypted HTTP traffic. Whenever the server sets a cookie, arrange for it to set the SECURE flag. The SECURE flag tells the user's browser to send the cookie back only over SSL-secured HTTPS connections; the browser will never send a Secure cookie over an unencrypted HTTP connection. The simplest step is to set this flag on every cookie your site uses.

<httpCookies httpOnlyCookies="true" requireSSL="true" />
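When forms authentication is in use, the authentication ticket cookie has its own requireSSL switch. The fragment below is a sketch that assumes a hypothetical Login.aspx page and a site served entirely over HTTPS.

```xml
<configuration>
  <system.web>
    <!-- Applies HttpOnly and Secure to cookies issued through ASP.NET -->
    <httpCookies httpOnlyCookies="true" requireSSL="true" />
    <authentication mode="Forms">
      <!-- Login.aspx is a hypothetical login page -->
      <forms loginUrl="~/Login.aspx" requireSSL="true" />
    </authentication>
  </system.web>
</configuration>
```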

8. Web farm security norms

Secure Viewstate and safeguard its integrity

In forms-based authentication, it is important to safeguard the viewstate, especially when web servers run in load-balanced mode in a web farm. In such scenarios, which server receives a given client request is non-deterministic; it depends on the load-balancing threshold and the current user load. A hacker may tamper with your viewstate and compromise its integrity. To protect the viewstate from being altered in transit between client and server, we must apply <%@ Page EnableViewStateMac="true" %>. This generates a message authentication code (MAC) for the viewstate at page level and keeps the page-level viewstate intact. But we need to tighten this further in a web farm environment, where another level of integrity has to be maintained.

- Level 1: Page-level integrity: <%@ Page EnableViewStateMac="true" %>.

- Level 2: If the web server is part of a web farm, apply common encryption and decryption keys across all web servers. Keep the machineKey consistent on every server so that each one uses the same validation and decryption keys. Note that AutoGenerate produces a different key on each server, so a web farm needs explicit key values:

<machineKey validationKey="[explicit key, identical on every server]"

decryptionKey="[explicit key, identical on every server]"

validation="SHA1"/>
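The page-level setting can also be applied site-wide through the pages element, which avoids repeating the directive on every page. This is a minimal sketch; viewStateEncryptionMode is an optional extra that encrypts the viewstate in addition to signing it.

```xml
<configuration>
  <system.web>
    <!-- Signs (MAC) and encrypts viewstate for every page in the application -->
    <pages enableViewStateMac="true" viewStateEncryptionMode="Always" />
  </system.web>
</configuration>
```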

Session state management

As I said earlier regarding web farm environments, successive requests from the same logged-in user may hit different web servers in the farm. With in-process session state, the session holding the user's data is then not available on the other servers, which may result in an invalid session for each successive request. The usual workaround is out-of-process state management, for example storing the session in SQL Server.

Reference: http://msdn.microsoft.com/en-us/library/ff649337.aspx.
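Out-of-process session state in SQL Server is switched on through the sessionState element. The sketch below assumes a hypothetical database server named SqlHost and that the session state database has already been provisioned (typically with the aspnet_regsql tool).

```xml
<configuration>
  <system.web>
    <!-- SqlHost is a hypothetical server name; timeout is in minutes -->
    <sessionState mode="SQLServer"
                  sqlConnectionString="Data Source=SqlHost;Integrated Security=SSPI"
                  cookieless="false"
                  timeout="20" />
  </system.web>
</configuration>
```

Every server in the farm points at the same database, so any server can pick up a session that another server started.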

9. Miscellaneous

Application hardening - A few first-level hardening prerequisites that one must take care of:

- Handle SQL injection. UrlScan also helps prevent SQL injection. Handle SQL injection in SQL scripts as well as on the front end. Client-side validation improves usability but can be bypassed, so always validate input on the server and use parameterized queries.

- Always encrypt query strings in your application. Query strings expose application data and help an attacker gather more information to hack the site.

- Try to use fewer hidden fields in the application.

- Encrypt viewstate. Ref: http://msdn.microsoft.com/en-us/library/aa479501.aspx.

- Never keep highly confidential data in cookies.

- Important: Install SSLSCAN.exe to check for weak cipher suites; the cipher strength should not be below 128 bits. E.g., Preferred Server Cipher(s) SSLv3 256 bits. Download: SSL Scan Utility

- Disable application trace and debug mode [false].
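The last item in the list above maps to two web.config settings, sketched here for a production deployment.

```xml
<configuration>
  <system.web>
    <!-- Release builds: no debug symbols and no relaxed request timeouts -->
    <compilation debug="false" />
    <!-- Disables trace.axd and page-level trace output -->
    <trace enabled="false" localOnly="true" />
  </system.web>
</configuration>
```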

10. Addendum: Security hardening in IIS 6.0 vs. IIS 7.0

IIS 6.0 vs. IIS 7.5

The above content is more in line with what we used to have in existing infrastructure setups and most of the applications in production are still in IIS 6.0 and Window Server 2003. As per demand and ongoing migrations, the above security aspects are really taken care of in IIS7. To make this article more useful and better, I’ve added a few contexts on IIS7.5 with respect to radical changes it underwent since the inception of IIS7.0.IIS 6.0 architecture

Internals of IIS 7

| Sr. No | IIS 6 | IIS 7 |

| 1 | IIS 6.0 - it has its own authentication and authorization mechanism and ASP.NET has its own authentication and authorization modes. There is isolation in terms of authentication and authorization. | IIS 7.5 has only authentication option with ASP.NET having only authorization mode enabled. IIS 7.5 has two modes: Classic (which apt IIS 6.0) and Integrated mode where authentication lies on IIS whereas authorization lies in ASP.NET. |

| 2 | IIS 6.0 has anonymous access that exists in users and Guest group IIS_WPG. | IIS 7.5 has anonymous access assigned to the new Windows built-in user IUSR that exists in the user group – IIS_IUSRS. This replaces IIS 6.0 IIS_WPG with IIS_IURS. This group does not require user id/password. There is an option to include application pool identity as an anonymous user. |

| 3 | There are web service extensions to enable non static such as ASP.NET , webdav, server side includes, etc. | This feature is available directly and enabled by default. That is part of CGI and ISAPI.dll restriction that can be set accordingly. |

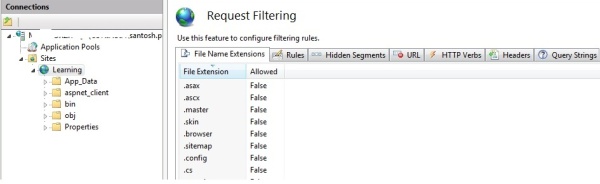

| 4 | We can use UrlScan to set rules to apply restrictions on URL request filtering. | It has built in feature for request filtering. Query strings Header File Name Extension HTTP Verbs Rules - e.g. SQL injection URL |

| 5 | Wild card script mapping available. | Wild card mapping available with classic IIS 6.0 and integrated pipeline mode IIS7. Just look for <modules runAllManagedModulesForAllRequests="true"> |

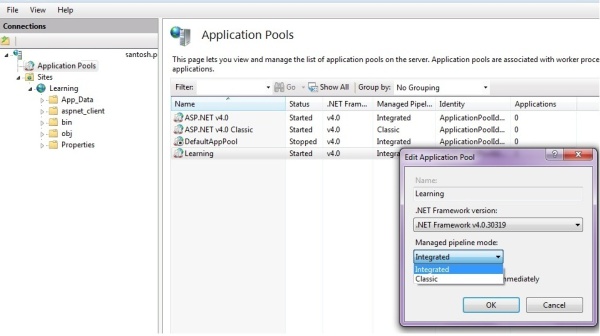

IIS 7 Application Pool- Integrated and Classic modes with the ASP.NET Framework option

IIS 7 request filtering: Alternative to UrlScan

This is just an overview of IIS; much more information is available online for reference. It should point the reader towards digging deeper into IIS to understand the security implications of improper handling or configuration.

We can filter requests to IIS and block malicious calls to website resources.

- Filter by file name extension

- Set rules, for example to restrict cross-domain calls

- HTTP verbs: allow/deny GET, POST, PUT, OPTIONS, FIND, TRACE, etc.

- Query string: limit the query string length and special characters in the URL

- Headers: set limits on headers
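Several of the filters listed above can be sketched in web.config as follows. The fragment assumes IIS 7 or later with the Request Filtering feature installed; the blocked extension and the allowed verbs are illustrative choices, and the limits shown match the documented IIS defaults.

```xml
<configuration>
  <system.webServer>
    <security>
      <requestFiltering>
        <!-- Block an unused file type; .mdb is an illustrative choice -->
        <fileExtensions allowUnlisted="true">
          <add fileExtension=".mdb" allowed="false" />
        </fileExtensions>
        <!-- Only the listed verbs are accepted; TRACE, OPTIONS, etc. are rejected -->
        <verbs allowUnlisted="false">
          <add verb="GET" allowed="true" />
          <add verb="POST" allowed="true" />
          <add verb="HEAD" allowed="true" />
        </verbs>
        <!-- Reject directory traversal sequences in the URL -->
        <denyUrlSequences>
          <add sequence=".." />
        </denyUrlSequences>
        <!-- Sizes are in bytes -->
        <requestLimits maxAllowedContentLength="30000000" maxUrl="4096" maxQueryString="2048" />
      </requestFiltering>
    </security>
  </system.webServer>
</configuration>
```

Requests rejected by request filtering are answered with 404 sub-status codes, which fits the earlier advice about preferring 404 over 403.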

11. Addendum: HotLinking Resources

Sometimes our website resources, such as images, are exposed by URL and referenced directly by other websites. Impact: it increases irrelevant traffic and raises copyright issues. To prevent this, we can use URL Rewrite to prohibit others from using images served from our website, returning a restricted watermark image instead.

Restrict hot linking of images or resources using:

- URL Rewrite

- Request Filtering

- Stop Hot Linking workaround
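A hot-linking rule along these lines is one way to do it. The sketch assumes the IIS URL Rewrite module is installed; the domain example.com and the placeholder image path are hypothetical.

```xml
<configuration>
  <system.webServer>
    <rewrite>
      <rules>
        <rule name="Prevent image hotlinking" stopProcessing="true">
          <match url=".*\.(gif|jpg|jpeg|png)$" ignoreCase="true" />
          <conditions>
            <!-- Let through requests with no referrer (direct visits, some proxies) -->
            <add input="{HTTP_REFERER}" pattern="^$" negate="true" />
            <!-- Let through requests referred by our own site (hypothetical domain) -->
            <add input="{HTTP_REFERER}" pattern="^https?://(www\.)?example\.com/" negate="true" />
          </conditions>
          <!-- Everything else receives the watermark image (hypothetical path) -->
          <action type="Rewrite" url="/images/hotlink-blocked.png" />
        </rule>
      </rules>
    </rewrite>
  </system.webServer>
</configuration>
```

The Referer header can be forged or stripped, so this reduces casual hot linking rather than preventing it outright.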

12. Addendum: Security Best Practices

Remove unnecessary Response Header

Remove the Server response header, e.g., Server: Microsoft-IIS/7.5

public class CustomHeadersModule : IHttpModule

{

public void Init(HttpApplication context)

{

context.PreSendRequestHeaders += OnPreSendRequestHeaders;

}

public void Dispose() { }

static void OnPreSendRequestHeaders(object sender, EventArgs e)

{

// remove the Server Http header

HttpContext.Current.Response.Headers.Remove("Server");

//remove the X-AspNetMvc-Version Http header

HttpContext.Current.Response.Headers.Remove("X-AspNetMvc-Version");

}

}
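For the module above to run, it has to be registered with the pipeline. The registration below is a sketch assuming integrated pipeline mode, a hypothetical namespace MyNameSpace, and a hypothetical assembly name MyWebApp; adjust the type attribute to wherever the class actually lives.

```xml
<configuration>
  <system.webServer>
    <modules>
      <!-- type is "Namespace.Class, Assembly"; both names are hypothetical here -->
      <add name="CustomHeadersModule" type="MyNameSpace.CustomHeadersModule, MyWebApp" />
    </modules>
  </system.webServer>
</configuration>
```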

Remove Response Header:X-Powered-By - Indicates that the website is "powered by ASP.NET."

<httpProtocol >

<customHeaders >

<remove name="X-Powered-By" />

</customHeaders >

</httpProtocol >

Remove Response Header: X-AspNet-Version - Specifies the version of ASP.NET used.

<system.web>

<httpRuntime enableVersionHeader="false"/>

</system.web>

Removing X-AspNetMvc-Version: in Global.asax.cs, add this line:

protected void Application_Start()

{

MvcHandler.DisableMvcResponseHeader = true;

}

MVC Cross Site Request Forgery

- Html.AntiForgeryToken()

- ValidateAntiForgeryToken

- Salt-based: Html.AntiForgeryToken("SaltValue")

XSS attacks in ASP.NET

- HTML encode: Html.Encode(feedback.Message)

- AntiXss library: @Encoder.JavaScriptEncode

- JavaScript encoding helper class: @Ajax.JavaScriptStringEncode

- Reference: http://weblogs.asp.net/jongalloway//preventing-javascript-encoding-xss-attacks-in-asp-net-mvc

Safeguard Controller and Action

- AllowAnonymous

- Authorize

13. Fine tune Response Header

HTTP Strict Transport Security (HSTS)

The Strict-Transport-Security response header tells the browser to reach the site only over HTTPS for the number of seconds given in max-age (31536000 seconds is one year).

<system.webServer>

<httpProtocol>

<customHeaders>

<add name="Strict-Transport-Security" value="max-age=31536000"/>

</customHeaders>

</httpProtocol>

</system.webServer>

Important Caveat

Before enabling this header, assess it against your redirect rules and any domain-specific http URLs that conflict with this setting. Courtesy of Scott Hanselman; refer to this explanation:

What was happening with my old (inherited) website? Well, someone years ago wanted to make sure a specific endpoint/page on the site was served under HTTPS, so they wrote some code to do just that. No problem, right? Turns out they also added an else that effectively forced everyone to HTTP, rather than just using the current/inherited protocol. This was a problem when Strict-Transport-Security was turned on at the root level for the entire domain. Now folks would show up on the site and get this interaction:

- GET http://foo/web

- 301 to http://foo/web/ (canonical ending slash)

- 307 to https://foo/web/ (redirect with method, in other words, internally redirect to secure and keep using the same verb (GET or POST))

- 301 to http://foo/web (internal else that was dumb and legacy)

- rinse, repeat

What's the lesson here? A configuration change that turned this feature on at the domain level of course affected all sub-directories and apps, including our legacy one. Our legacy app wasn't ready. Be sure to implement HTTP Strict Transport Security (HSTS) on all your sites, but be sure to test and KNOW YOUR REDIRECTS.

Reference: OWASP HTTP Strict Transport Security (HSTS)
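One way to keep redirects consistent with HSTS is a single site-wide HTTP to HTTPS rule, with no application code forcing HTTP anywhere. The rule below is a sketch that assumes the IIS URL Rewrite module is installed and that every path of the site can be served over HTTPS.

```xml
<configuration>
  <system.webServer>
    <rewrite>
      <rules>
        <rule name="Redirect to HTTPS" stopProcessing="true">
          <match url="(.*)" />
          <conditions>
            <!-- {HTTPS} is OFF when the request arrived over plain HTTP -->
            <add input="{HTTPS}" pattern="^OFF$" ignoreCase="true" />
          </conditions>
          <!-- {R:1} is the path captured by the match pattern -->
          <action type="Redirect" url="https://{HTTP_HOST}/{R:1}" redirectType="Permanent" />
        </rule>
      </rules>
    </rewrite>
  </system.webServer>
</configuration>
```

With a rule like this in place, any legacy code that redirects back to HTTP will show up immediately as a redirect loop during testing.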

X-Content-Type-Options

The X-Content-Type-Options response HTTP header is a marker used by the server to indicate that the MIME types advertised in the Content-Type headers should not be changed and be followed. This allows to opt-out of MIME type sniffing, or, in other words, it is a way to say that the webmasters knew what they were doing. From https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/X-Content-Type-Options

X-XSS-Protection: 1; mode=block

The HTTP X-XSS-Protection response header is a feature of Internet Explorer, Chrome and Safari that stops pages from loading when they detect reflected cross-site scripting (XSS) attacks. Although these protections are largely unnecessary in modern browsers when sites implement a strong Content-Security-Policy that disables the use of inline JavaScript ('unsafe-inline'), they can still provide protections for users of older web browsers that don't yet support CSP. From https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/X-XSS-Protection

<httpProtocol>

<customHeaders>

<remove name="X-Powered-By" />

<remove name="X-Content-Type-Options" />

<add name="X-Content-Type-Options" value="nosniff" />

<add name="X-XSS-Protection" value="1; mode=block" />

</customHeaders>

</httpProtocol>

14. HTTP: Postman Interceptor and Developers Tool

I mostly use Postman Interceptor and the browser developer tools for website security assessments. Postman Interceptor is a very handy tool and, in my experience, easier to use than Fiddler. Below are the steps to enable the interceptor.

Install the Postman app in Chrome along with the Postman Interceptor plugin. One is the app, and the other is a browser interceptor that captures the requests and responses of any website. It intercepts all incoming and outgoing calls, and you can filter by XHR or by host-level route to capture specific calls. Please refer to the screenshots. Enable the browser interceptor and then open Postman; Postman also has a switch to capture Chrome browser requests and responses. The app intercepts Chrome browser URLs and captures the HTTP traffic in its history. You can open a history entry and modify the request body or request header of a GET/POST request, tweaking data in the header, URL, or body to play with the request. This helps you analyse and design security assessment test cases at the functional level. Moreover, you can save this history in the cloud.

Now the developer tools (F12): capture screenshots, look into cookies in the Application section, switch to offline mode to check the behaviour of the site, and much more. I encourage developers to play more with these developer tool features. Select XHR under the Network tab to capture AJAX or Angular calls. It's all there to make our life easy.

Know How Postman Interceptor

Step1: Install these Chrome plugins and app

Step2: Switch On Interceptor button

Step3: Lookup History and play around

Know How Developer Tool F12

Read Ajax XHR request

Throttling- Offline! Preserve Logs! Disable Cache

Sometimes you want to clear the cache and reload a request; see the screenshot for an easy way to do it. If you want to simulate 3G or 4G speed, or even offline mode, you can do it in Chrome. A very interesting option is preserving the log, which keeps XHR requests across page requests.

15. Cookies, Smart and vulnerable cookies

If you have a transactional site with forms login, then in ASP.NET the forms authentication cookie appears as .ASPXAUTH and the session cookie as ASP.NET_SessionId. You have to be careful while dealing with them, and you have to understand the importance of the session timeout and the forms authentication timeout. Anyone who intercepts these two cookies can play with the website's core functionality. Any third-party script can easily read your cookies through document.cookie if they are not marked HttpOnly. If you are using ASP.NET Identity authentication, you may see different cookie names.

It is very important to handle session timeout both for server-side page processing and for AJAX calls. Please mark my words: these are two different scenarios. One is purely server-side processing, where the session times out during a page request; the other is an AJAX call on a submit button, where you call a server API that returns a response. In both scenarios, session expiry must be handled carefully. There can also be scenarios where you have to balance the session timeout against the forms timeout, such as a shopping cart where the session is created first and authenticated later with forms or some other method.

public void OnAuthorization(AuthorizationContext filterContext)

{

var isAuthenticated = filterContext.HttpContext.User.Identity.IsAuthenticated;

if (!isAuthenticated)

RedirectToAccessDeniedPage(filterContext);

var userContext = filterContext.HttpContext.Session["UserContext"]; // "UserContext" is a hypothetical session key

if (userContext == null)

{

if (filterContext.HttpContext.Request.IsAjaxRequest())

{

filterContext.HttpContext.Response.StatusCode = 440;

}

else

{

if (!HttpContext.Current.Response.IsRequestBeingRedirected)

{

//either redirect to login or logout

WebUtil.Redirect(returnUrl);

}

}

return;

}

}
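The two timeouts discussed in this section are configured separately, which is why they can drift apart. The sketch below keeps them aligned at 20 minutes; the value and the Login.aspx page are assumptions for illustration, not recommendations from the article.

```xml
<configuration>
  <system.web>
    <!-- Session timeout in minutes -->
    <sessionState timeout="20" />
    <authentication mode="Forms">
      <!-- Forms ticket timeout in minutes; slidingExpiration renews it on activity -->
      <forms loginUrl="~/Login.aspx" timeout="20" slidingExpiration="true" />
    </authentication>
  </system.web>
</configuration>
```

If the session expires before the forms ticket, the user is still authenticated but has no session data, which is the case the filter above checks for.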

Cookies are, after all, cookies, and must be handled with care. Especially when using output cache with a session-based approach, ensure the page-level output cache varies by a user-specific variable.

16. Break It and Fix It!

In 50% of cases, when the URL or query string length exceeds the predefined length, the system fails with a 500 error, "System unavailable", or a YSOD. Handle this scenario well, and negative test your website with it.

225 char path (OK): https://com.com/AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

226 char path (BAD): https://abc.com/AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA AAAAAAA

17. OWASP Top 10 Commandments YYYY

- OWASP Top 10 2017

- OWASP Top 10 2013

18. Conclusion

It's good to incorporate the key security best practices during the design phase, ensuring that the system is not at risk and is hack-resilient. The references given in this article are very informative, and I urge the reader to take some time going through them. I hope I have met the reader's expectations and that this article helps.

19. Guru: Troy Hunt

C is for cookie, H is for hacker – understanding HTTP only and Secure cookies

References

- Secure Web Server

- Building Secure ASP.NET Pages and Controls

- MSDN SQL injection 1

- MSDN SQL injection 2

- SQL injection 3

Document Version

- Added reference for Viewstate encryption, dated 30 Nov 2011.

- Added reference for SQL injection, dated 1 Dec 2011.

- Added reference for web farm security considerations, dated 5 Dec 2011.

- Added reference for Best Practice/Hot linking, dated 6 Aug 2014.

- Added custom code for Server details response header, 9 Aug 2016.

- Added OWASP Top 10, tools and negative test case.
